📍 Location Finder - Ecuador

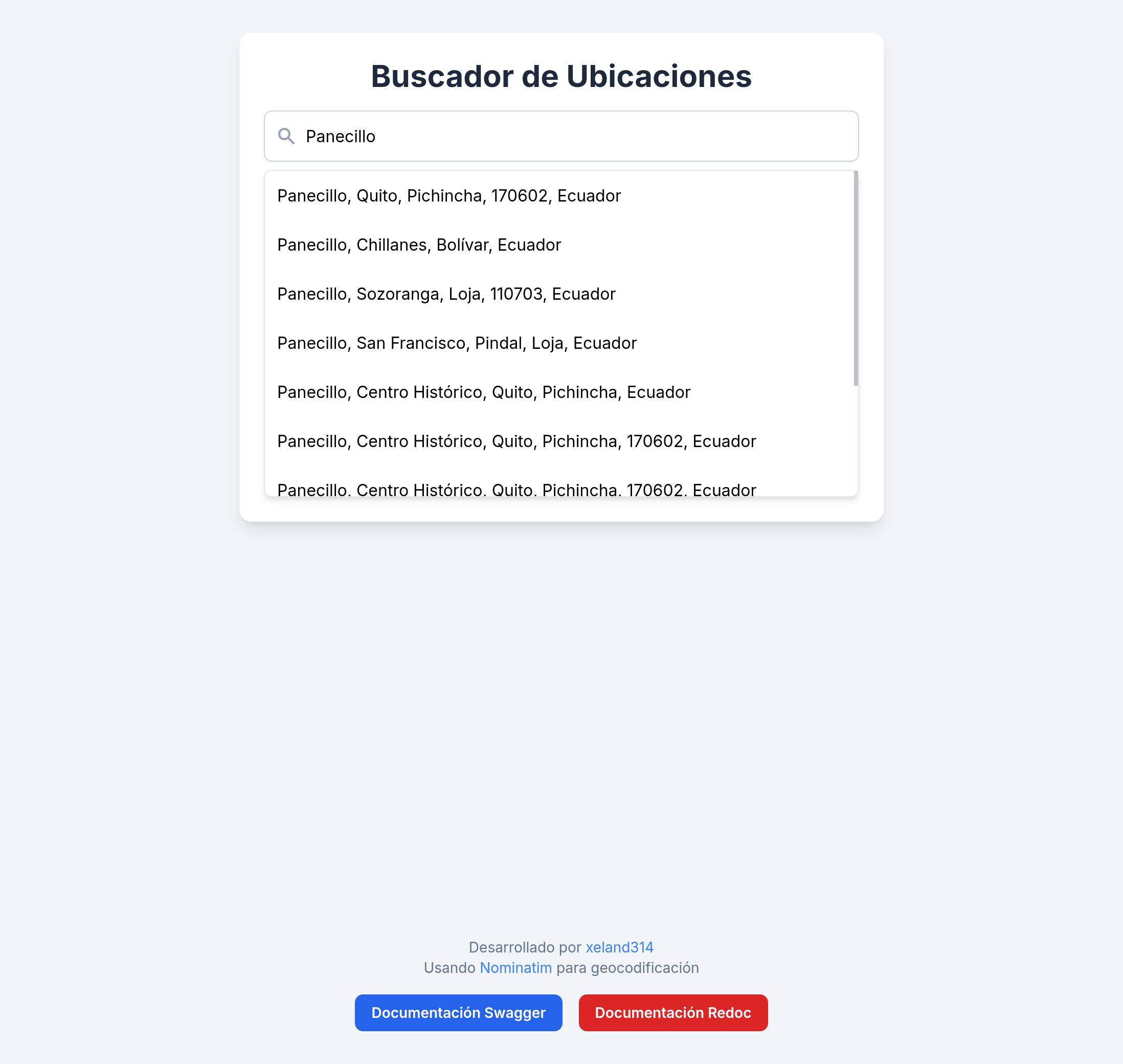

This project is a location finder that uses a local Nominatim server, filtering only results within Ecuador since I have locally configured Nominatim with data only from my country.

✨ Features

- Created with FastAPI and a simple HTML frontend.

- Redirects user requests to the local Nominatim server.

- Displays results on an HTML page.

- Allows viewing a history of searches.

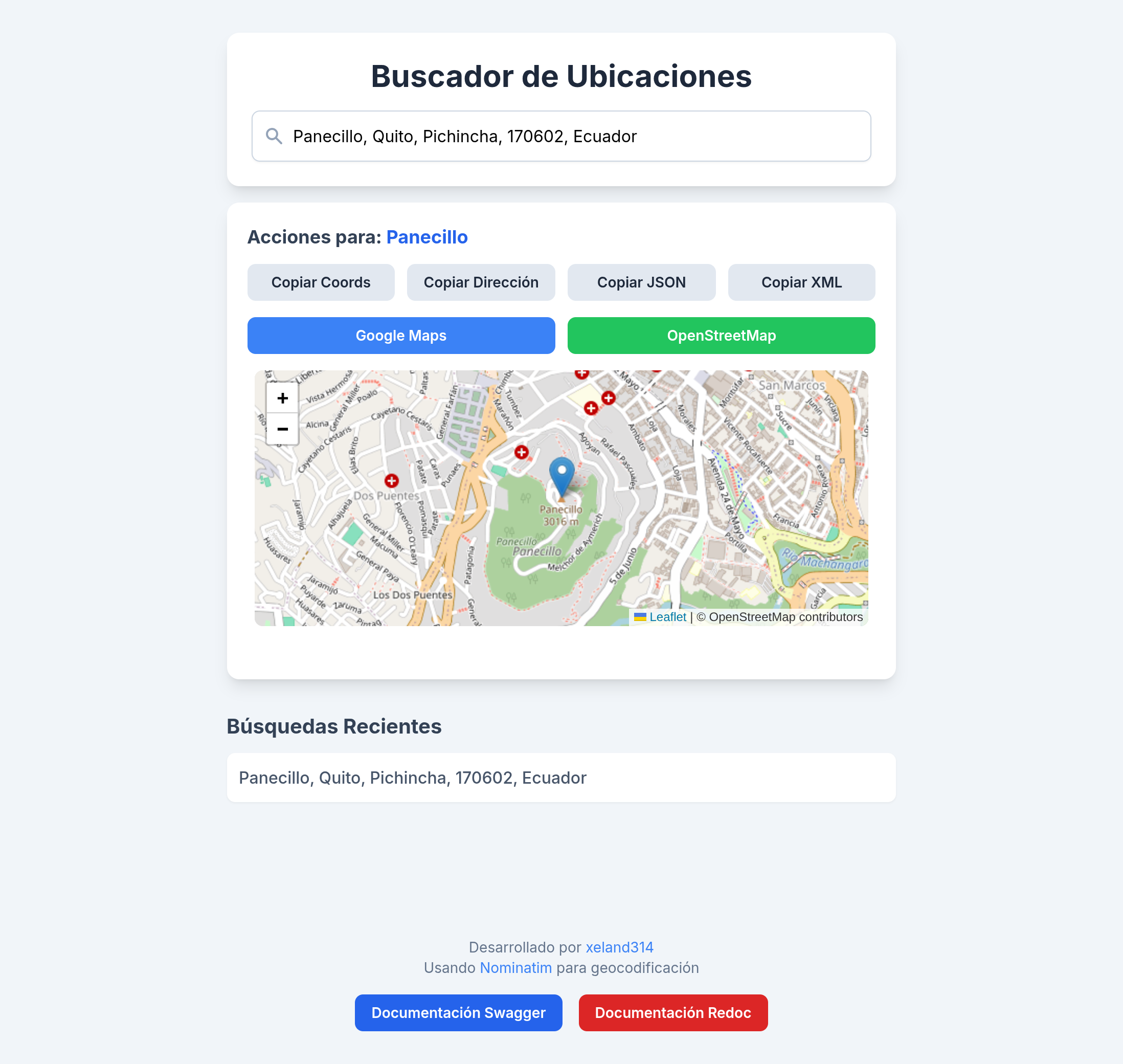

- Easily copy the coordinates of a location.

- Option to open the location directly in Google Maps.

- Displays the selected location on an interactive map using Leaflet.

- Allows copying responses in JSON and XML formats.

- Access to interactive API documentation via Swagger and ReDoc.

💡 Usage

- Enter an address or place in Ecuador.

- View the results and select the desired location.

- Observe the location on the interactive map.

- Copy the coordinates or the responses in JSON or XML format.

- Open the location in Google Maps or OSM.

- Check the recent search history.

-

Access the API documentation at

/docs(Swagger) or/redoc(ReDoc).

🤖 Scoring System

The project uses a technique to improve the relevance of autocomplete suggestions based on actual user usage. When a user selects a location, this "feedback" is recorded in a SQLite database.

This system assigns a popularity "boost" to the most selected results, causing them to appear at the top of future searches. In this way, the search engine "learns" which locations are most relevant to users in Ecuador. This process is similar to reinforcement learning, where the system optimizes itself based on user interactions.

Technical Operation

- SQLite is used for persistent, long-term storage of user feedback.

- A custom AutocompleteScorer combines popularity from the database with factors like fuzzy matching, Nominatim relevance, and place type to generate a final score for each suggestion.

Bootstrap Feedback

If you don't have users but want to simulate user responses or prioritize

certain places, you can use the bootstrap_feedback.py script to

generate initial feedback. This script allows you to load location data

and assign an initial score, which helps establish a starting point for

the scoring system.

import sysimport concurrent.futuresimport threadingimport requestsfrom fuzzywuzzy import fuzz# Base URL of the FastAPI applicationFASTAPI_BASE_URL = "http://127.0.0.1:8089"# --- Path to the seed queries file ---SEED_QUERIES_FILE = "seed_queries.txt"# --- Thresholds for considering a suggestion "good" or "popular" ---MIN_NOMINATIM_IMPORTANCE = 0.01MIN_FUZZY_RATIO = 40MIN_TOKEN_SET_RATIO = 70# --- Threading Configuration ---# Number of concurrent threads.MAX_WORKERS = 10# Lock to protect console printing (to avoid messy output)print_lock = threading.Lock()def load_seed_queries(file_path: str) -> list[str]:"""Loads seed queries from a text file."""queries = []try:with open(file_path, "r", encoding="utf-8") as f:for line in f:query = line.strip()if query and not query.startswith("#"): # Ignore empty lines or commentsqueries.append(query)except FileNotFoundError:print(f"Error: The file '{file_path}' was not found.")sys.exit(1) # Use sys.exit to exit the scriptreturn queriesdef record_simulated_selection(query: str, selected_item: dict):"""Simulates sending feedback to the FastAPI endpoint."""feedback_url = f"{FASTAPI_BASE_URL}/feedback"payload = {"query": query,"selected_item": {"osm_id": selected_item.get("osm_id"),"display_name": selected_item.get("display_name"),"lat": selected_item.get("lat"),"lon": selected_item.get("lon"),"type": selected_item.get("type"),},}try:response = requests.post(feedback_url, json=payload, timeout=5)response.raise_for_status()with print_lock: # Protect printingprint(f" ✅ Successful simulation: '{selected_item.get('display_name')}' for query '{query}'")return True # Indicate successexcept requests.exceptions.RequestException as e:with print_lock: # Protect printingprint(f" ❌ Error sending simulated feedback for '{query}': {e}")return False # Indicate failuredef get_autocomplete_suggestions(query: str):"""Gets suggestions from your own /autocomplete endpoint."""autocomplete_url = f"{FASTAPI_BASE_URL}/autocomplete?query={query}"try:response = requests.get(autocomplete_url, timeout=10)response.raise_for_status()return response.json()except requests.exceptions.RequestException as e:with print_lock: # Protect printingprint(f" Error getting suggestions from FastAPI for '{query}': {e}")return []def process_query(query: str):"""Function that processes a single query and simulates selection.Designed to be run by a thread."""suggestions = get_autocomplete_suggestions(query)if not suggestions:with print_lock:print(f" ⚠️ No suggestions obtained from your API for '{query}'.")return 0 # No selection was simulatedbest_suggestion_for_query = Nonehighest_combined_bootstrap_score = -1for item in suggestions:display_name = item.get("display_name", "")nominatim_importance = float(item.get("importance", 0.0))query_lower = query.lower()display_name_lower = display_name.lower()fuzz_ratio = fuzz.ratio(query_lower, display_name_lower)token_set_ratio = fuzz.token_set_ratio(query_lower, display_name_lower)passes_importance = nominatim_importance >= MIN_NOMINATIM_IMPORTANCEpasses_fuzzy_or_token_set = (fuzz_ratio >= MIN_FUZZY_RATIO) or (token_set_ratio >= MIN_TOKEN_SET_RATIO)current_bootstrap_score = ((nominatim_importance * 0.4) + (token_set_ratio * 0.4) + (fuzz_ratio * 0.2))with print_lock: # Protect printing to avoid console clutterprint(f" - Suggestion: '{display_name}' (Imp: {nominatim_importance:.2f}, Fuzz: {fuzz_ratio}, TokenSet: {token_set_ratio})")if passes_importance and passes_fuzzy_or_token_set:print(f" 👍 Qualifies: Imp={passes_importance}, (Fuzz={fuzz_ratio}>={MIN_FUZZY_RATIO} OR TokenSet={token_set_ratio}>={MIN_TOKEN_SET_RATIO})")if current_bootstrap_score > highest_combined_bootstrap_score:highest_combined_bootstrap_score = current_bootstrap_scorebest_suggestion_for_query = itemelse:imp_fail = (f"(Imp: {nominatim_importance:.2f} < {MIN_NOMINATIM_IMPORTANCE})"if not passes_importanceelse "")fuzzy_fail = (f"(Fuzz: {fuzz_ratio} < {MIN_FUZZY_RATIO})"if (fuzz_ratio >= MIN_FUZZY_RATIO)else "")token_set_fail = (f"(TokenSet: {token_set_ratio} < {MIN_TOKEN_SET_RATIO})"if not (token_set_ratio >= MIN_TOKEN_SET_RATIO)else "")print(f" 👎 Does not qualify: Imp: {passes_importance} {imp_fail}, Fuzz/TokenSet: {passes_fuzzy_or_token_set} ({fuzzy_fail} {token_set_fail})")if best_suggestion_for_query:record_simulated_selection(query, best_suggestion_for_query)return 1 # 1 selection was simulatedelse:with print_lock:print(f" ❌ No 'good' suggestion found for '{query}' under the defined thresholds.")return 0 # No selection was simulateddef bootstrap_feedback_db():"""Simulates interactions to pre-populate the feedback database using threads."""print("Starting feedback database bootstrapping...")# Load seed queries from the fileseed_queries = load_seed_queries(SEED_QUERIES_FILE)if not seed_queries:print("No queries found in the seed file. Aborting.")return# Make sure the FastAPI server is running before executing thistry:requests.get(f"{FASTAPI_BASE_URL}/docs", timeout=5)print("FastAPI server accessible.")except requests.exceptions.ConnectionError:print("Error: The FastAPI server is not running at", FASTAPI_BASE_URL)print("Please start your FastAPI application before running this script.")returntotal_simulated_selections = 0# Use ThreadPoolExecutor to run queries in parallelwith concurrent.futures.ThreadPoolExecutor(max_workers=MAX_WORKERS) as executor:# Map the process_query function to each seed query# as_completed returns results as threads finishfutures = {executor.submit(process_query, query): query for query in seed_queries}for future in concurrent.futures.as_completed(futures):query = futures[future]try:# Each thread returns 1 if a selection was simulated, 0 otherwise.total_simulated_selections += future.result()except concurrent.futures.CancelledError as exc:with print_lock:print(f"The query '{query}' was cancelled: {exc}")except concurrent.futures.TimeoutError as exc:with print_lock:print(f"The query '{query}' exceeded the timeout: {exc}")except ValueError as exc:with print_lock:print(f"The query '{query}' generated a ValueError: {exc}")except TypeError as exc:with print_lock:print(f"The query '{query}' generated a TypeError: {exc}")except requests.exceptions.RequestException as exc:with print_lock:print(f"The query '{query}' generated an HTTP request error: {exc}")print("--- Bootstrapping Complete ---")print(f"Total simulated selections: {total_simulated_selections}")# Note: The DB_PATH is not printed directly from here, as cache.py already does it.print("The feedback database has been updated.")print("--- ATTENTION! ---")print(f"Concurrency was used with {MAX_WORKERS} threads.")print("This may cause your FastAPI server's rate limits to be reached more quickly.")print("If you see many 429 errors, consider reducing the number of MAX_WORKERS or increasing the limits in your 'rate_limiter.py'.")print("You can also adjust the thresholds (MIN_NOMINATIM_IMPORTANCE, MIN_FUZZY_RATIO, MIN_TOKEN_SET_RATIO) in this script.")print(f"You can add/modify queries in '{SEED_QUERIES_FILE}'.")if __name__ == "__main__":try:_ = fuzz.ratio("test", "test")except ImportError:print("Error: The 'fuzzywuzzy' library is not installed.")print("Install it with: pip install fuzzywuzzy python-Levenshtein")sys.exit(1)bootstrap_feedback_db()

🚀 Query Caching with Redis

To speed up responses and reduce the load on the local Nominatim server, a short-term cache layer is implemented using Redis.

- Upon receiving a query, the API first attempts to get the result from the Redis cache.

- If the query has been made recently (within 5 minutes), the response is served instantly from the cache.

- If there is no response in the cache, the query is made to the Nominatim server, processed, and the final result is saved in Redis for future requests.

This strategy significantly improves performance for repeated searches, providing a faster and more efficient user experience.

🛠️ Requirements

- Python 3.8+

- FastAPI

- Local Nominatim server (although this one is configured only with data from Ecuador)

📦 Installation

pip install fastapi uvicorn requests python-multipart

🚀 Execution

uvicorn main:app --reload

⚠️ Disclaimer

This project is designed solely for personal use or in controlled environments with a local Nominatim server configured with data from Ecuador.

Warning:

Do not use this search engine with the original Nominatim server or with platforms that do not allow the use of autocompletion or automated query redirection. Misuse may violate the terms of service and result in blocks or penalties.